In the world of web development and internet crawling, the robots.txt file is an unsung hero that helps manage how automated bots interact with a website. If you’ve ever wondered how search engines or web crawlers know which pages to index or skip, or how website owners control that access, the answer lies in the humble robots.txt file. This article will delve into the history, importance, and usage of robots.txt, giving you an in-depth understanding of why it’s such a vital tool for both website owners and web crawlers.

What is Robots.txt?

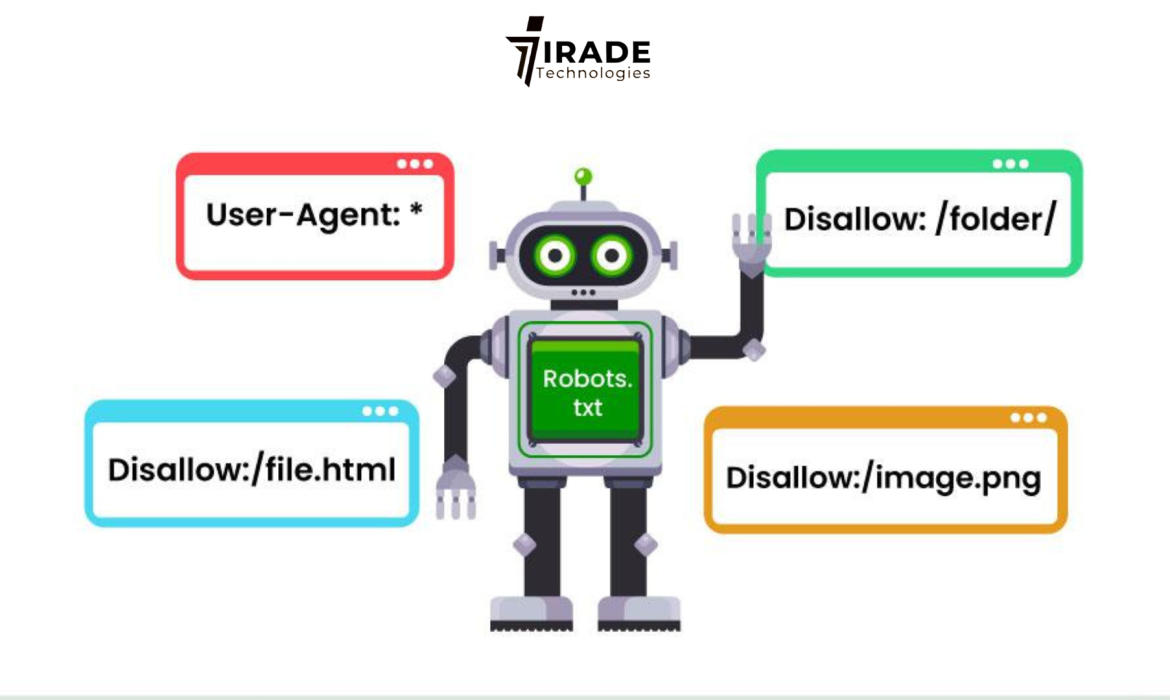

At its core, robots.txt is a plain text file stored on a website’s server that provides instructions to web crawlers (also called spiders or bots). These bots are automated programs that browse the internet to index web pages for search engines, collect data, or perform other tasks. The robots.txt file tells these bots which areas of a website they are allowed to access, and which they should avoid. Essentially, it serves as a gatekeeper, allowing website administrators to control the traffic of web crawlers.

Most websites have a robots.txt file, and crawlers look for it in one place only: the root of the site. To view a site’s robots.txt file, add /robots.txt after the site’s domain name. For example, the robots.txt file for Google’s developer site is at developers.google.com/robots.txt.
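Since the file sits at the root, its address can be derived from any page URL on the same site. Here is a minimal Python sketch using only the standard library; the function name robots_txt_url is made up for this illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, swap in the fixed path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://developers.google.com/search/docs?hl=en"))
# prints https://developers.google.com/robots.txt
```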

How Does Robots.txt Work?

A robots.txt file operates on a simple premise: it consists of directives that tell web crawlers what they are permitted to crawl and what they should refrain from crawling. This control is achieved through two primary directives: User-agent and Disallow.

User-agent: This refers to a specific crawler or bot. Each bot identifies itself with a user-agent string. For example, Googlebot is Google’s search engine crawler, while Bingbot is Bing’s. This allows website owners to target specific bots with their instructions. If a site owner wants to give different rules for different crawlers, they can do so using separate “User-agent” entries.

Disallow: This directive tells the bot not to crawl a particular directory or page. For example, if you don’t want a bot to crawl your “admin” section, the robots.txt file could include a line like:

Disallow: /admin/

The combination of these two elements gives website owners a great deal of control over which content is available for automated crawling. For instance, a website might block certain pages (such as login or registration pages) from being crawled to avoid unnecessary strain on the server or to keep crawlers out of areas that have no place in search results.
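To see how User-agent groups and Disallow rules combine, the sketch below feeds a small robots.txt file to Python’s standard urllib.robotparser module and asks which URLs each bot may crawl. The rules, the example.com URLs, and the bot name OtherBot are assumptions made up for the example, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot gets its own group, everyone else gets "*".
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /admin/

User-agent: *
Disallow: /admin/
Disallow: /login/
"""


def build_parser(text):
    """Parse robots.txt content that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Googlebot matches its own group, which only blocks /admin/.
print(parser.can_fetch("Googlebot", "https://example.com/admin/users"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/login/"))       # True

# Any other bot falls back to the "*" group.
print(parser.can_fetch("OtherBot", "https://example.com/login/"))        # False
print(parser.can_fetch("OtherBot", "https://example.com/blog/"))         # True
```

A bot that finds a group naming it follows that group only, which is why Googlebot is allowed into /login/ here even though the catch-all group blocks it.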

While robots.txt can control a crawler’s access to a site, it’s important to note that it doesn’t provide any security measures. It’s simply a guideline for well-behaved bots. Malicious crawlers or those that disregard the rules can still access restricted content if they wish. However, most commercial bots, like Googlebot, will respect the instructions given in robots.txt files.
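The reason robots.txt offers no security is that the check happens inside the crawler, not on the server. The sketch below illustrates this under stated assumptions: polite_fetch and fake_fetch are hypothetical helpers, ExampleBot is a made-up bot name, and fake_fetch stands in for a real HTTP request so the code runs offline.

```python
from urllib.robotparser import RobotFileParser


def polite_fetch(parser, user_agent, url, fetch):
    """Call fetch(url) only when the parsed robots.txt permits it."""
    if not parser.can_fetch(user_agent, url):
        return None  # a well-behaved bot stops here
    return fetch(url)


def fake_fetch(url):
    # Stand-in for a real HTTP request, so the sketch runs offline.
    return f"fetched {url}"


parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /admin/"])

print(polite_fetch(parser, "ExampleBot", "https://example.com/admin/", fake_fetch))
# prints None
print(polite_fetch(parser, "ExampleBot", "https://example.com/blog/", fake_fetch))
# prints fetched https://example.com/blog/

# A bot that skips the check is not stopped by anything in robots.txt.
print(fake_fetch("https://example.com/admin/"))
# prints fetched https://example.com/admin/
```

Content that truly needs protection should sit behind authentication or other server-side access control.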

Why Do Websites Use Robots.txt?

There are several reasons why website owners choose to implement robots.txt files, ranging from optimizing crawling efficiency to improving server performance. Here are some key benefits:

Crawling Efficiency: Websites can have hundreds or even thousands of pages. Automatically crawling all of them may not be necessary or efficient, especially for large, dynamic sites. Robots.txt allows site owners to direct crawlers to relevant pages, improving the overall efficiency of the indexing process.

Avoiding Overloading Servers: Some websites generate pages dynamically, meaning they can create a significant load on servers if crawlers attempt to access every URL. By using robots.txt, administrators can prevent these pages from being crawled, ensuring that the servers aren’t unnecessarily burdened.

Keeping Crawlers Away from Sensitive Areas: Websites with login pages or administrative tools may not want crawlers fetching them. Adding “Disallow” rules for these areas tells compliant crawlers to stay out. Keep in mind that Disallow blocks crawling, not indexing: a disallowed URL can still show up in search results if other pages link to it, so content that must stay out of the index needs a noindex rule or access control rather than robots.txt alone.

Facilitating Focused Crawling: A website might want crawlers to focus on the sections that matter most for SEO purposes. For example, a website selling products may want its product pages crawled, but not its “thank you” or “checkout” pages. Robots.txt provides a simple way to ask crawlers to skip them.
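As a rough sketch of the shop scenario, the Python snippet below generates a robots.txt file from a list of paths to skip and then checks the result with the standard library parser. The helper render_robots_txt, the paths /checkout/ and /thank-you/, the domain shop.example, and the bot name AnyBot are all assumptions made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def render_robots_txt(groups):
    """Build robots.txt text from {user-agent name: [disallowed path prefixes]}."""
    blocks = []
    for agent, paths in groups.items():
        lines = [f"User-agent: {agent}"]
        lines += [f"Disallow: {path}" for path in paths]
        blocks.append("\n".join(lines))
    # Groups are separated by a blank line; the file ends with a newline.
    return "\n\n".join(blocks) + "\n"


shop_rules = render_robots_txt({"*": ["/checkout/", "/thank-you/"]})
print(shop_rules)

# Check the generated file the way a compliant crawler would read it.
parser = RobotFileParser()
parser.parse(shop_rules.splitlines())
print(parser.can_fetch("AnyBot", "https://shop.example/products/42"))  # True
print(parser.can_fetch("AnyBot", "https://shop.example/checkout/cart"))  # False
```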

The History and Evolution of Robots.txt

The concept of the robots.txt file dates back to the early days of the web. HTML, the language that powers web pages, was invented in 1991, and web browsers arrived shortly after. In 1994, Martijn Koster proposed robots.txt, giving webmasters some control over which bots could crawl their sites.

This early implementation of robots.txt predates even Google, which wasn’t founded until 1998. The format has remained largely unchanged since its inception, which speaks to its simplicity and effectiveness. Even today, a robots.txt file written in the early days of the web would still be valid and work as intended.

The format has still evolved over time. For example, in 2007, search engines introduced the “sitemap” directive, which lets webmasters include a link to an XML sitemap within the robots.txt file. This development helped bots find and crawl the most important pages of a website more effectively.
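Here is a small sketch of how a crawler might pick up that sitemap link, using the site_maps() method that Python’s urllib.robotparser provides from version 3.8 onward. The robots.txt content and the example.com URL are invented for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: the Sitemap line is independent of any User-agent group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns a list of sitemap URLs, or None if the file lists none.
sitemaps = parser.site_maps()
print(sitemaps)
# prints ['https://example.com/sitemap.xml']
```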

In 2022, after years of global collaboration, the Robots Exclusion Protocol behind robots.txt was published as RFC 9309, an IETF (Internet Engineering Task Force) Proposed Standard, solidifying its role in internet infrastructure.

The Flexibility of Robots.txt

One of the great advantages of robots.txt is its flexibility. It is a simple yet powerful tool that gives website owners granular control over how their site is crawled. Whether the goal is blocking specific crawlers, directing bots to focus on certain areas, or keeping crawlers out of certain pages, robots.txt can express it.

The format itself is also adaptable. New directives or modifications can be introduced over time to address the evolving needs of the internet. As new types of crawlers, such as those used for artificial intelligence, emerge, robots.txt will likely continue to grow and evolve to accommodate these changes.

Why Robots.txt is Here to Stay

Despite the availability of new technologies and tools, robots.txt is still a cornerstone of the web’s ecosystem. It’s simple, human-readable, and effective, with an established standard that has stood the test of time. While newer formats may emerge, it will likely remain a vital tool for years to come.

The global community’s continued engagement with robots.txt keeps it relevant and effective. There are thousands of tools, software libraries, and communities dedicated to helping developers manage and understand robots.txt files. Still, one of the beautiful aspects of robots.txt is its simplicity: it’s easy to edit and read, even without specialized tools.

Conclusion

In a world where web crawlers constantly scan the internet for information, the robots.txt file offers a simple but powerful way to manage crawler access. By using it, website owners can optimize their site’s performance, avoid unnecessary server strain, and keep compliant crawlers out of areas that don’t belong in search results. With its long history, flexible format, and active community, robots.txt is an essential tool that’s here to stay.

For those eager to learn more about the inner workings of robots.txt and how to fine-tune their web crawlers, the ongoing Robots Refresher series will continue to provide valuable insights. Stay tuned for future installments that will dive deeper into advanced use cases, best practices, and the latest trends in the world of web crawling.
